Vincent Sanders: A picture is worth a thousand words

When Sir Tim made a photo of a band the first image on the web back in 1992, I do not imagine for a moment he understood the full scope of what was to follow. I also do not imagine Marc Andreessen understood the technical debt he was about to introduce on that fateful day in 1993 when he proposed the img tag allowing inline images.

Many will argue, of course, that the old adage of my title may not apply to the web where, if it were true, every page view would be like reading a novel! Many of those novels would involve cats or one pixel tall colour gradients.

Leaving aside the philosophical arguments for a moment, it takes web browser authors a great deal of effort to quickly and efficiently put those cat pictures in front of your eyeballs.

NavigatingImages are navigated by a user in a selection of ways, including:

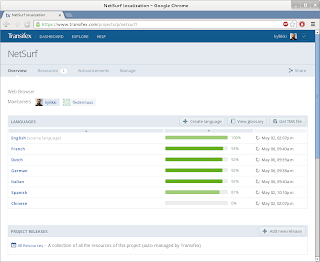

Pre-render processing

When the image object fetch is completed the browser must process the image for rendering, which is where this gets even more complicated. I shall describe how this works in NetSurf, as I know that browser's internals best, but operation is pretty similar in many browsers.

The content fetch will have performed basic content sniffing to determine the received object's MIME type. This is necessary because a great number of servers are misconfigured, and a disturbingly large number of images served as PNG are really JPEGs etc. Indeed, sometimes you even get files served which are not images!
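As a rough illustration of content sniffing (not NetSurf's actual code), the sketch below compares the first bytes of the fetched object against a few well known file signatures. The function name `sniff_image_type` and the signature table are assumptions made for this example, and real sniffers cover many more formats.

```python
# Hypothetical signature table: leading "magic" bytes mapped to a MIME type.
SIGNATURES = (
    (b"\x89PNG\r\n\x1a\n", "image/png"),
    (b"\xff\xd8\xff", "image/jpeg"),
    (b"GIF87a", "image/gif"),
    (b"GIF89a", "image/gif"),
)


def sniff_image_type(data):
    """Return the MIME type implied by the leading bytes, or None if unknown."""
    for magic, mime_type in SIGNATURES:
        if data.startswith(magic):
            return mime_type
    return None


# A JPEG served by a misconfigured server as image/png is still detected
# as a JPEG, because the declared type is never consulted.
jpeg_start = b"\xff\xd8\xff\xe0\x00\x10JFIF\x00"
print(sniff_image_type(jpeg_start))        # prints image/jpeg

# Something that is not an image at all matches no signature.
print(sniff_image_type(b"<!DOCTYPE html>"))  # prints None
```

Returning `None` for unrecognised data leaves the caller free to decide whether to trust the server's declared type or to treat the object as unhandled.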

Upon receipt of enough data to decode the image header for the detected MIME type, the image's metadata is extracted. This metadata usually includes things like size and colour depth.
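To make the "enough data to decode the header" step concrete, here is a minimal sketch for PNG only. A PNG starts with an 8 byte signature followed by the IHDR chunk, whose first fields are width, height, bit depth and colour type. The function name `png_metadata` is an assumption for this example; a browser would normally leave this to its image library.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_metadata(data):
    """Return (width, height, bit_depth, colour_type), or None if more data is needed."""
    # 8 byte signature + 4 byte chunk length + 4 byte chunk type
    # + the first 10 bytes of the IHDR body = 26 bytes.
    if len(data) < 26:
        return None
    if not data.startswith(PNG_SIGNATURE) or data[12:16] != b"IHDR":
        raise ValueError("not a PNG header")
    # Big-endian: two 32 bit integers followed by two single bytes.
    return struct.unpack(">IIBB", data[16:26])


# Build the start of a 640x480, 8 bit RGBA PNG purely for demonstration.
header = (
    PNG_SIGNATURE
    + struct.pack(">I", 13)
    + b"IHDR"
    + struct.pack(">IIBBBBB", 640, 480, 8, 6, 0, 0, 0)
)
print(png_metadata(header[:20]))  # prints None, too little data so far
print(png_metadata(header))       # prints (640, 480, 8, 6)
```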

If the img tag in the original source document omitted the width or height of the image, the entire document render may have to be reflowed at this point to allow the correct spacing. The reflow process is often unsightly and should be avoided. Additionally, at this stage, if the image is too large to handle or is in an unhandled format, the object will be replaced with the "broken" image icon.

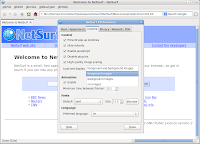

Often that will be everything that is done with the image. When I added "lazy" image conversion to NetSurf we performed extensive profiling and discovered that well over 40% of images on visited pages are simply never viewed by the user, but that small images (100 pixels on a side) were almost always displayed.

This odd distribution comes down to how images are used in modern web pages. They broadly fall into two categories, "decoration" and "content". For example, all the background gradients, sidebar images etc. are generally small images used as decoration, whereas the cat picture above is part of the "content". A user may not scroll down a page to see content but almost always gets to "view" the decoration.
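The idea of "lazy" conversion can be sketched in a few lines. This is an illustration of the general technique under stated assumptions, not NetSurf's implementation: the class name `LazyImage` and the `decoder` callable are hypothetical.

```python
class LazyImage:
    """Holds the fetched source bytes and converts them only when first drawn."""

    def __init__(self, source_data, decoder):
        self.source_data = source_data
        self.decoder = decoder   # hypothetical callable: source bytes -> bitmap
        self.bitmap = None       # nothing is converted at fetch time

    def redraw(self):
        # Convert on first use. An image that is never scrolled into view
        # never pays the decode cost.
        if self.bitmap is None:
            self.bitmap = self.decoder(self.source_data)
        return self.bitmap


decode_calls = []


def counting_decoder(data):
    """Stand-in decoder that records each call instead of really decoding."""
    decode_calls.append(len(data))
    return bytes(data)


image = LazyImage(b"pretend this is a JPEG", counting_decoder)
print(len(decode_calls))  # prints 0, fetched but never drawn
image.redraw()
image.redraw()
print(len(decode_calls))  # prints 1, decoded once on the first redraw
```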

Rendering

The exact heuristics browsers use to decide when the render operation is performed differ, but they all have a similar job to perform. When I say render here, this may be to an "off screen" view if the images are actually on another tab etc. Regardless, the image data must be converted from the source data (a PNG, JPEG etc.) into a format suitable for the browser's display plotting routines.

The browser will create a render bitmap in whatever format the plotting routines require (for example the GTK plotters use a Cairo image surface), use an image library to unpack the source image data (a PNG, say) into the render bitmap (possibly performing transforms such as scaling and rotation) and then use that bitmap to update the pixels on screen.

The most common transform at render time is scaling. This can be problematic as not all image libraries have output scaling capabilities, which means decoding the entire source image and then scaling from that bitmap.

This is especially egregious if the source image is large (perhaps a multi-megabyte JPEG) but the width and height are set to produce a thumbnail. The effect is amplified if the user has set the image cache size limit to a small value like 8 megabytes (yes, some users do this; apparently their machines have 32MB of RAM and they browse the web).
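Some back-of-the-envelope arithmetic shows why this hurts. The figures below are assumptions chosen for illustration: a 4000x3000 source image, a 100x75 thumbnail, and a render bitmap using 4 bytes per pixel.

```python
BYTES_PER_PIXEL = 4  # assumption: 32 bit per pixel render bitmap


def bitmap_bytes(width, height):
    """Memory needed to hold a fully decoded bitmap of the given size."""
    return width * height * BYTES_PER_PIXEL


full_decode = bitmap_bytes(4000, 3000)  # decode the whole source first
thumbnail = bitmap_bytes(100, 75)       # what actually ends up on screen
cache_limit = 8 * 1024 * 1024           # the 8 megabyte limit mentioned above

print(full_decode)                # prints 48000000
print(thumbnail)                  # prints 30000
print(full_decode // thumbnail)   # prints 1600
print(full_decode > cache_limit)  # prints True
```

Under these assumptions the intermediate bitmap is 1600 times larger than the thumbnail and does not fit in the cache at all, so it is likely to be decoded again on the next redraw.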

In addition the image may well require tiling (for background gradients) and quite complex transforms (including rotation) thanks to CSS 3. Add in that JavaScript can alter the CSS style, and hence the transform, and you can imagine quite how complex the renderers can become.
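Even plain tiling adds up. The sketch below, with the hypothetical helper `tile_origins`, simply lists where each copy of a tile must be plotted to cover an area, ignoring clipping, offsets and transforms. The 800x600 area is an assumed size for illustration.

```python
def tile_origins(area_width, area_height, tile_width, tile_height):
    """Return the top-left (x, y) of every tile needed to cover the area."""
    return [
        (x, y)
        for y in range(0, area_height, tile_height)
        for x in range(0, area_width, tile_width)
    ]


# A one pixel tall gradient, 800 pixels wide, tiled down an 800x600 area
# needs a separate plot for every row.
print(len(tile_origins(800, 600, 800, 1)))  # prints 600

# A small 2x2 tile over a 4x4 area needs four plots.
print(tile_origins(4, 4, 2, 2))  # prints [(0, 0), (2, 0), (0, 2), (2, 2)]
```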

Caching

The keen reader might spot that repeated renderings of the source image (e.g. because the window is scrolled or clipped) result in this computationally expensive operation also being repeated. We solve this by interposing a render image cache between the source data and the render bitmaps.

By keeping the data in the preferred format, image rendering performance can be greatly increased. It should be noted that this cache is completely distinct from the source object cache and relates only to the rendered images.
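A render image cache of this kind might be sketched as follows. This is a deliberately simple version with a byte budget and least-recently-used eviction, which is an assumption made for the example; the class name `RenderCache` and the `convert` callable are hypothetical, and NetSurf's real rules are more involved.

```python
from collections import OrderedDict


class RenderCache:
    """Keeps converted bitmaps within a byte budget, evicting the least recently used."""

    def __init__(self, limit_bytes):
        self.limit_bytes = limit_bytes
        self.used_bytes = 0
        self.entries = OrderedDict()  # key -> converted bitmap (bytes)

    def get(self, key, convert):
        """Return the bitmap for key, calling convert() only on a cache miss."""
        if key in self.entries:
            self.entries.move_to_end(key)  # mark as most recently used
            return self.entries[key]
        bitmap = convert()
        self.entries[key] = bitmap
        self.used_bytes += len(bitmap)
        # Evict the oldest entries, but never the one just added.
        while self.used_bytes > self.limit_bytes and len(self.entries) > 1:
            _, evicted = self.entries.popitem(last=False)
            self.used_bytes -= len(evicted)
        return bitmap


cache = RenderCache(limit_bytes=10)
cache.get("cat.jpg", lambda: b"c" * 6)
cache.get("gradient.png", lambda: b"g" * 6)  # over budget, cat.jpg is evicted
print(list(cache.entries))  # prints ['gradient.png']
print(cache.used_bytes)     # prints 6
```

Scrolling the same image back into view is then a dictionary lookup rather than a fresh decode, at the cost of holding the converted bitmap in memory.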

Originally NetSurf performed the render conversion for every image as it was received, without exception, rather than at render time, resulting in a great deal of unnecessary processing and memory usage. This was done for simplicity and to optimise for "decoration" images.

The rules for determining what gets cached and for how long are somewhat involved, and the majority of the code in NetSurf's current implementation is metrics and statistics generation used to make better decisions.

There comes a time at which this cache is no longer sufficient and rendering performance becomes unacceptable. The NetSurf renderer errs on the side of reducing resource usage (clearing the cache) at the expense of increased render times. Other browsers make different compromises based on the expected resources of the user base.

Finally

Hopefully that gives a reasonable overview of the operations a browser performs just to put that cat picture in front of your eyeballs.

And maybe next time your browser is guzzling RAM to plot thousands of images you might have a bit more appreciation of exactly what it is up to.
